EANN 2007

10th International Conference on Engineering Applications of Neural Networks

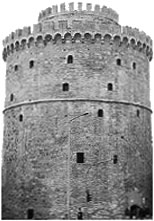

29-31 August 2007

Thessaloniki, Hellas

Invited speaker 1

"Applying PCA neural models for the blind separation of signals"

It is well known that the biologically motivated Hebbian self-organizing principle is related to Principal Component Analysis (PCA), a classical second-order feature extraction method. Furthermore, various neural models have been proposed that implement a wide range of PCA extensions, including Nonlinear PCA (NLPCA) and Oriented PCA (OPCA). Nonlinear PCA, in particular, has been linked to Independent Component Analysis (ICA), a higher-order method for decomposing linear, instantaneous mixtures into independent constituent components. ICA is a class of Blind Signal Separation (BSS) problems. It is widely believed that PCA is good only for the spatial pre-whitening of the input signals and cannot be applied to the BSS problem itself. In this talk we shall demonstrate the connection between PCA and the solution of the BSS problem under typical second-order assumptions. We shall show that PCA or Oriented PCA, in conjunction with temporal filtering, leads to the solution of BSS. The selection of the optimal filter is still an open issue. The advantage of using PCA neural models is that they can be applied directly to large-scale problems without explicitly computing the data covariance matrix. The method is tested using simulated data and compared against standard second-order methods.
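The idea of combining pre-whitening, temporal filtering and a second PCA step can be illustrated with a minimal batch sketch. It is not the neural model from the talk: it computes covariance matrices explicitly with NumPy, which the neural PCA models are meant to avoid. The function name `pca_bss`, the two-tap filter and the toy sinusoidal sources are all assumptions made for illustration, and the sketch relies on the sources being uncorrelated with distinct spectra.

```python
import numpy as np

def pca_bss(x, h):
    """Separate mixtures x (channels x samples) using two PCA steps.

    h is a 1-D array of FIR filter taps; its choice is an assumption here.
    """
    x = x - x.mean(axis=1, keepdims=True)
    # Step 1: spatial pre-whitening via PCA of the zero-lag covariance.
    eigval, eigvec = np.linalg.eigh(x @ x.T / x.shape[1])
    z = (eigvec / np.sqrt(eigval)).T @ x
    # Step 2: apply the same temporal filter to every whitened channel.
    zf = np.stack([np.convolve(row, h, mode="valid") for row in z])
    # Step 3: PCA of the filtered data; its eigenvectors estimate the
    # rotation that whitening alone leaves undetermined.
    _, rot = np.linalg.eigh(zf @ zf.T / zf.shape[1])
    return rot.T @ z

# Toy data (hypothetical): two sinusoids with different frequencies,
# mixed by an arbitrary 2 x 2 matrix.
t = np.arange(5000)
sources = np.vstack([np.sin(0.05 * t), np.sin(1.3 * t)])
mixing = np.array([[1.0, 0.6], [0.4, 1.0]])
recovered = pca_bss(mixing @ sources, h=np.array([1.0, 1.0]))
```

The rows of `recovered` match the sources only up to order, sign and scale, which is the usual ambiguity in blind separation. The filter must give the sources different output powers, otherwise the second PCA step cannot tell them apart.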

Invited speaker 2

"Modal Learning in Neural Networks"

The trend in neural computing is to have a single weight update equation or learning method. There are now several forms of learning commonly utilised in various types of neural networks. These include error minimisation techniques, such as the well-known backpropagation; Bayesian methods; Learning Vector Quantization; and related methods such as Support Vector Machines. Hybrid systems do combine types of learning by utilising more than one network, often in a modular framework. However, it is uncommon for learning methods to be integrated into a single network, even though there is no biological or cognitive evidence on which to base the assumption that neurons are restricted to a single learning mode. There are several reasons to explore modal learning in neural networks. "Modal" refers to networks in which neurons adopt one of two or more learning modes (such as different weight update equations) and may swap between them during learning. One motivation is to overcome the inherent limitations of any given mode; another is inspiration from neuroscience, cognitive science and human learning; a third is the challenge of dealing with non-stationary input data or time-variant learning objectives. Three modal learning ideas will be presented, with examples: the Snap-Drift Neural Network (SDNN), which toggles its learning between two modes, either unsupervised or guided by performance feedback (reinforcement); a general approach to swapping between several learning modes in real time; and an adaptive function neural network, in which adaptation applies simultaneously to both the weights and the individual neuron activation functions. Examples will be drawn from a range of applications such as natural language parsing, speech processing, geographical location systems, the Iris dataset, user modelling, optical character recognition and virtual learning environments.
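The notion of a neuron swapping between weight update equations can be made concrete with a toy sketch. The abstract does not give the update rules, so both are assumptions: an element-wise minimum stands in for a "snap" mode and an LVQ-style step towards the input stands in for a "drift" mode. The function names, the `threshold` parameter and the rule of toggling whenever a performance score falls below it are likewise hypothetical.

```python
def snap(w, x):
    # Assumed "snap" rule: element-wise minimum of weight and input.
    return [min(wi, xi) for wi, xi in zip(w, x)]

def drift(w, x, rate=0.5):
    # Assumed "drift" rule: move each weight a fraction of the way to the input.
    return [wi + rate * (xi - wi) for wi, xi in zip(w, x)]

MODES = {"snap": snap, "drift": drift}

def train(w, samples, feedback, threshold=0.5):
    """Update w once per sample, toggling the mode when feedback is poor."""
    mode = "snap"
    history = []
    for x, score in zip(samples, feedback):
        if score < threshold:
            # Hypothetical switching rule: swap modes on low performance.
            mode = "drift" if mode == "snap" else "snap"
        w = MODES[mode](w, x)
        history.append(mode)
    return w, history

weights, modes = train([1.0, 1.0], [[0.5, 1.0], [1.0, 0.0]], [0.9, 0.1])
```

Here the first sample is learned in the snap mode, and the low second score switches the unit to the drift mode before the second update.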

Invited speaker 3

"Towards knowledge-based neural networks: At the junction of fuzzy sets and neurocomputing"

In this talk, we focus on the fundamentals and development issues of logic-driven constructs of fuzzy neural networks. These networks constitute an interesting conceptual and computational framework that benefits from the establishment of highly synergistic links between the technology of fuzzy sets and neural networks. The evident advantages of knowledge-based networks are twofold. First, the transparency of the neural architecture supports efficient learning, because domain knowledge can easily be incorporated before any learning takes place. This becomes possible given the compatibility between the architecture of the problem and the induced topology of the neural network. Second, once the training has been completed, the network is highly interpretable and thus translates directly into a series of truth-quantifiable logic expressions formed over a collection of information granules. The design process of the logic networks synergistically exploits the principles of information granulation, logic computing and underlying optimization, including biologically inspired techniques (such as particle swarm optimization, genetic algorithms and the like). We elaborate on the existing development trends, present key methodological pursuits and algorithms, and discuss some example applications. In particular, we show how the logic blueprint of the networks is supported by the use of various constructs of fuzzy sets, including logic operators, logic neurons, referential operators and fuzzy relational constructs.
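As a minimal sketch of what a logic neuron can look like, the code below implements OR and AND neurons in their commonly used form: an OR neuron combines each weight and input with a t-norm and aggregates with an s-norm, and an AND neuron does the reverse. The abstract does not fix the operators, so the product t-norm and probabilistic-sum s-norm are assumptions, as are the function names.

```python
from functools import reduce

def t_norm(a, b):
    # Product t-norm (assumed choice of fuzzy AND).
    return a * b

def s_norm(a, b):
    # Probabilistic sum (assumed choice of fuzzy OR).
    return a + b - a * b

def or_neuron(x, w):
    # OR over inputs, each first AND-ed with its weight; weight 0 masks an input.
    return reduce(s_norm, (t_norm(wi, xi) for wi, xi in zip(w, x)), 0.0)

def and_neuron(x, w):
    # AND over inputs, each first OR-ed with its weight; weight 1 masks an input.
    return reduce(t_norm, (s_norm(wi, xi) for wi, xi in zip(w, x)), 1.0)
```

With weights restricted to 0 and 1 and binary inputs, these neurons reduce to ordinary Boolean OR and AND over the unmasked inputs, which is one way to see why a trained network of such neurons can be read back as logic expressions.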




Invited speakers: Konstantinos I. Diamantaras, Dominic Palmer-Brown, Witold Pedrycz